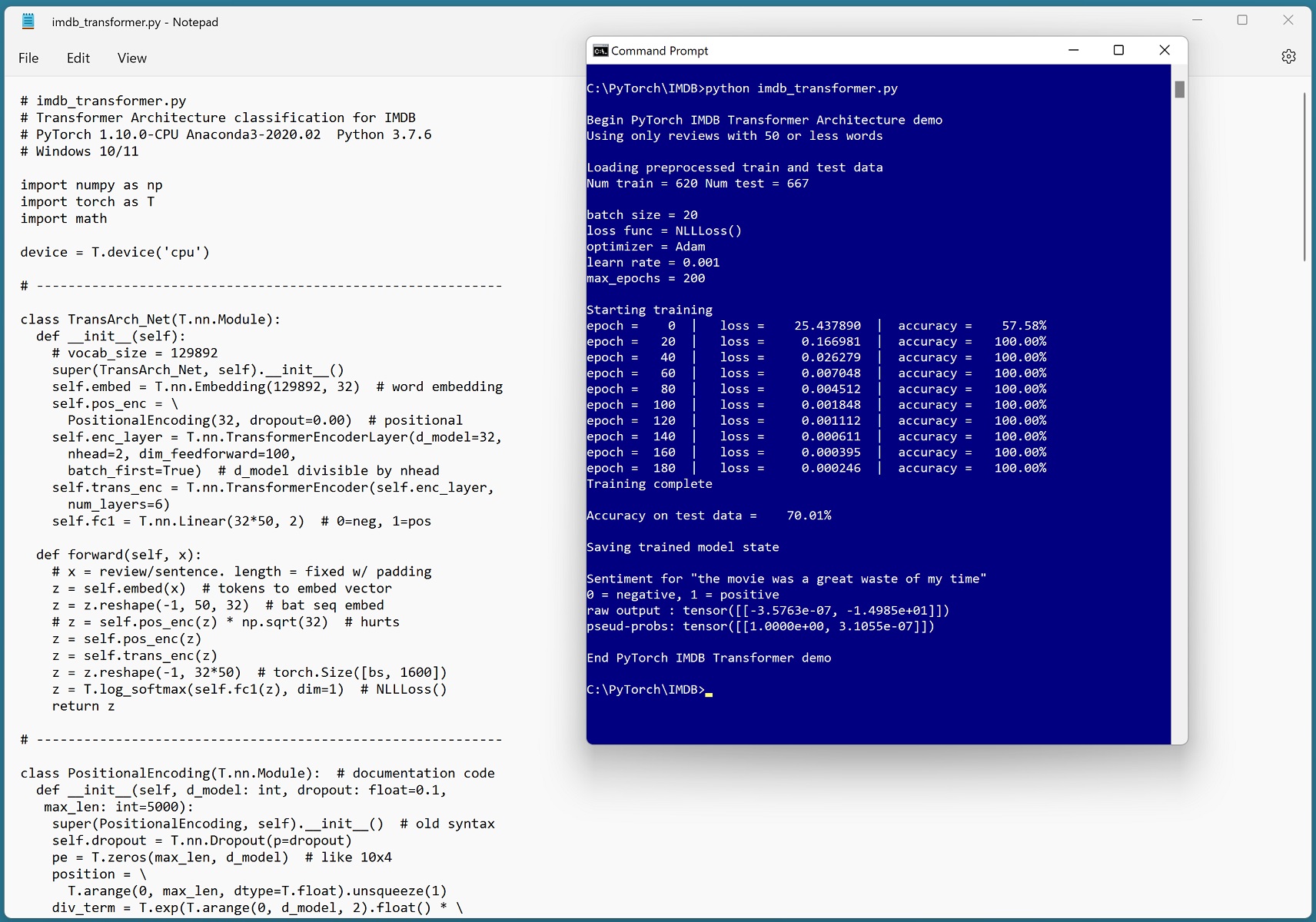

IMDB Classification using PyTorch Transformer Architecture

By a mysterious writer

Last updated 31 December 2024

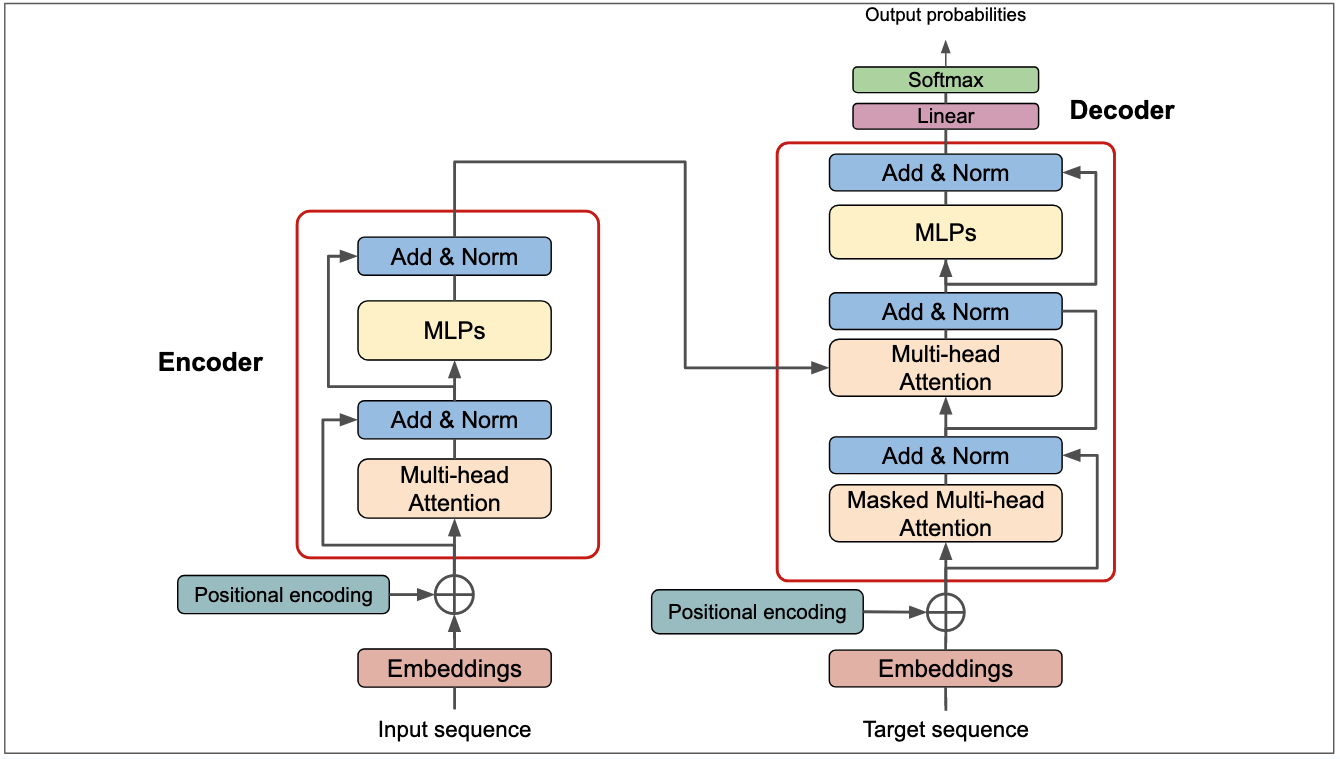

I have been exploring the Transformer architecture for natural language processing. I reached a big milestone when I put together a successful demo that classifies IMDB movie reviews using a PyTorch TransformerEncoder network. As is often the case, once I had the demo working, it all seemed easy. But the demo is in fact extremely complicated…
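To make the idea concrete, here is a minimal sketch of what a TransformerEncoder-based review classifier might look like in PyTorch. It is not the original demo: the class names (`PositionalEncoding`, `ReviewClassifier`), the tiny hyperparameters, the mean-pooling step, and the assumption that reviews arrive as integer token ids padded with 0 are all illustrative choices. Tokenization, vocabulary building, and the training loop are left out.

```python
import math
import torch
import torch.nn as nn


class PositionalEncoding(nn.Module):
    """Fixed sinusoidal position signal added to the token embeddings."""

    def __init__(self, d_model, max_len=512):
        super().__init__()
        pos = torch.arange(max_len).unsqueeze(1).float()
        div = torch.exp(
            torch.arange(0, d_model, 2).float() * (-math.log(10000.0) / d_model)
        )
        pe = torch.zeros(max_len, d_model)
        pe[:, 0::2] = torch.sin(pos * div)
        pe[:, 1::2] = torch.cos(pos * div)
        self.register_buffer("pe", pe.unsqueeze(0))  # (1, max_len, d_model)

    def forward(self, x):
        # x: (batch, seq_len, d_model)
        return x + self.pe[:, : x.size(1)]


class ReviewClassifier(nn.Module):
    """Hypothetical sentiment classifier: embed -> encode -> pool -> linear."""

    def __init__(self, vocab_size, d_model=32, nhead=2, num_layers=2,
                 dim_ff=64, pad_id=0):
        super().__init__()
        self.pad_id = pad_id
        self.d_model = d_model
        self.embed = nn.Embedding(vocab_size, d_model, padding_idx=pad_id)
        self.pos = PositionalEncoding(d_model)
        layer = nn.TransformerEncoderLayer(
            d_model=d_model, nhead=nhead, dim_feedforward=dim_ff,
            batch_first=True,
        )
        # Nested-tensor fast path disabled so the output keeps the input length.
        self.encoder = nn.TransformerEncoder(
            layer, num_layers=num_layers, enable_nested_tensor=False
        )
        self.head = nn.Linear(d_model, 2)  # two classes: negative, positive

    def forward(self, token_ids):
        # token_ids: (batch, seq_len) integer tensor, padded with pad_id
        pad_mask = token_ids == self.pad_id  # True where the token is padding
        x = self.pos(self.embed(token_ids) * math.sqrt(self.d_model))
        x = self.encoder(x, src_key_padding_mask=pad_mask)
        # Average only over real (non-padding) positions.
        keep = (~pad_mask).unsqueeze(-1).float()
        pooled = (x * keep).sum(dim=1) / keep.sum(dim=1).clamp(min=1.0)
        return self.head(pooled)  # (batch, 2) unnormalized class scores


# Two made-up "reviews" as token ids; the first is padded with zeros.
batch = torch.tensor([[5, 8, 2, 0, 0],
                      [3, 9, 4, 7, 1]])
model = ReviewClassifier(vocab_size=20)
logits = model(batch)
```

The returned `logits` would typically be passed to `nn.CrossEntropyLoss` together with 0/1 sentiment labels. Mean pooling over non-padding positions is only one way to collapse the sequence into a single vector; pooling from the first token is a common alternative.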

AI Research Blog - The Transformer Blueprint: A Holistic Guide to

Text Classification using PyTorch

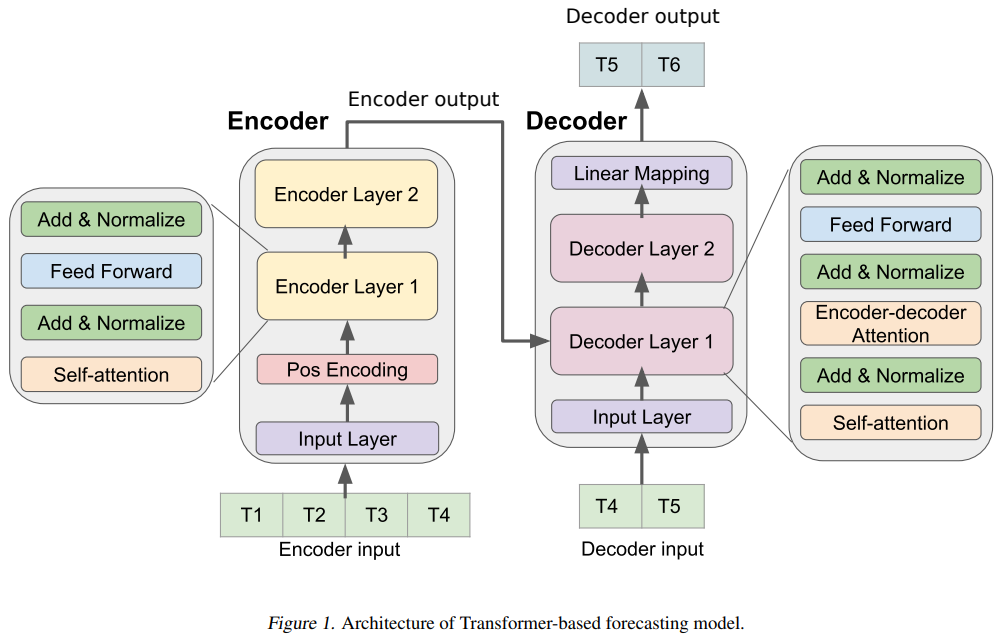

How to make a Transformer for time series forecasting with PyTorch

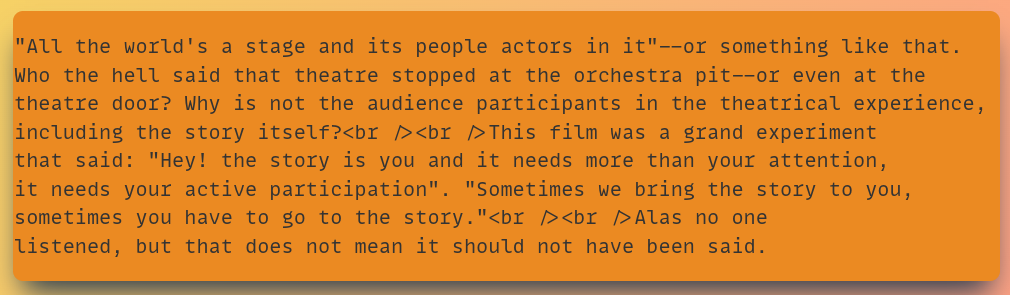

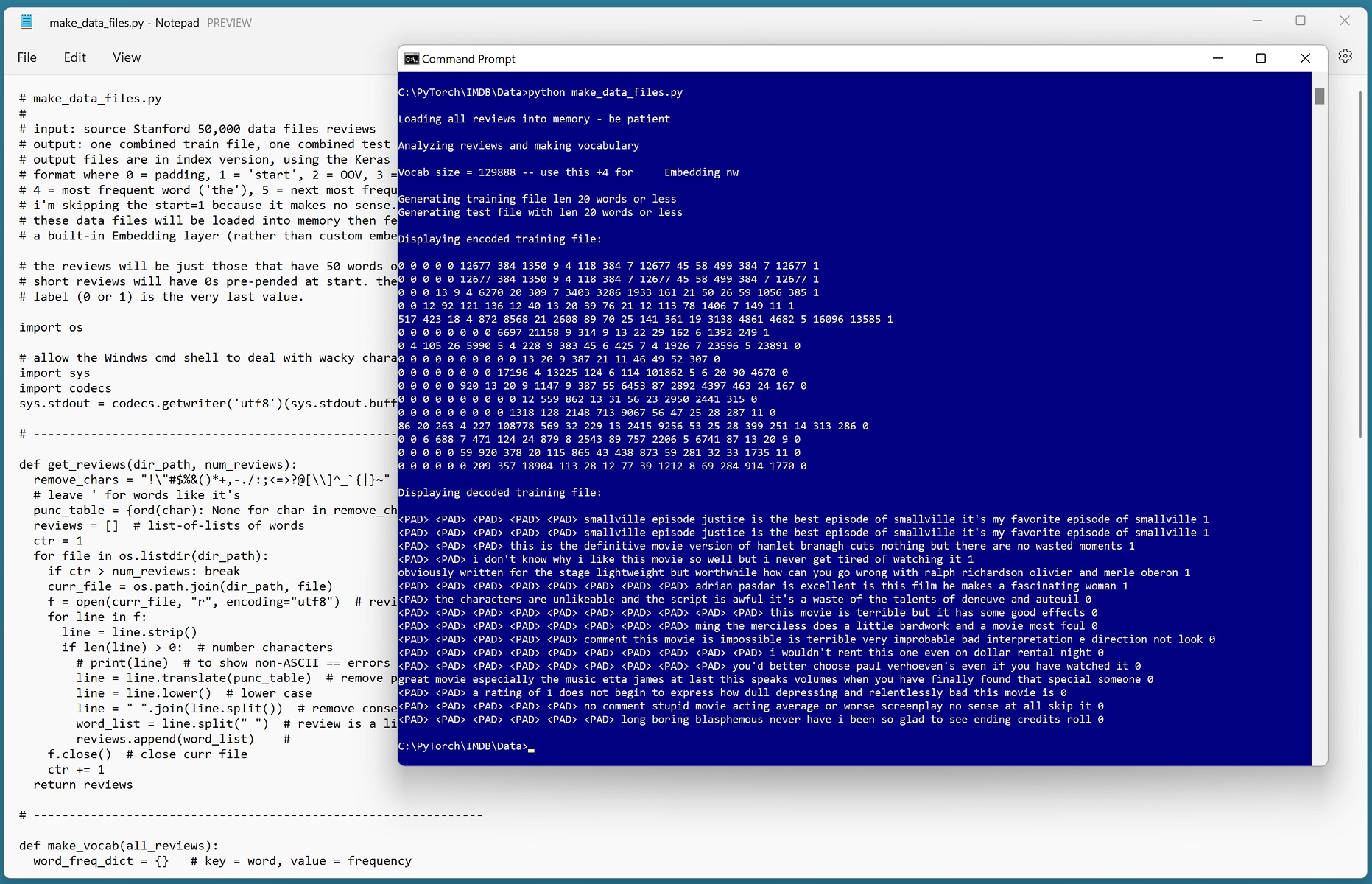

Preparing IMDB Movie Review Data for NLP Experiments -- Visual

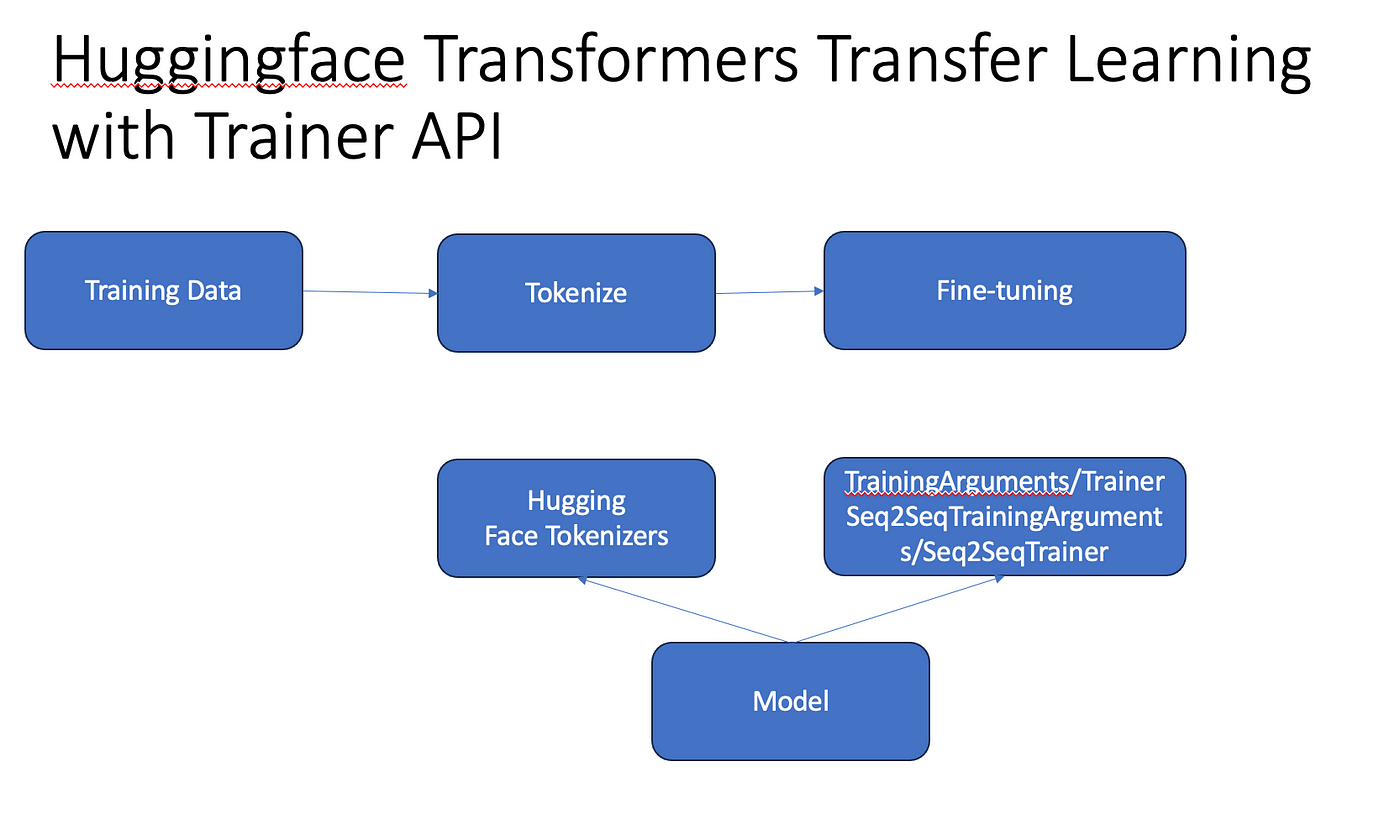

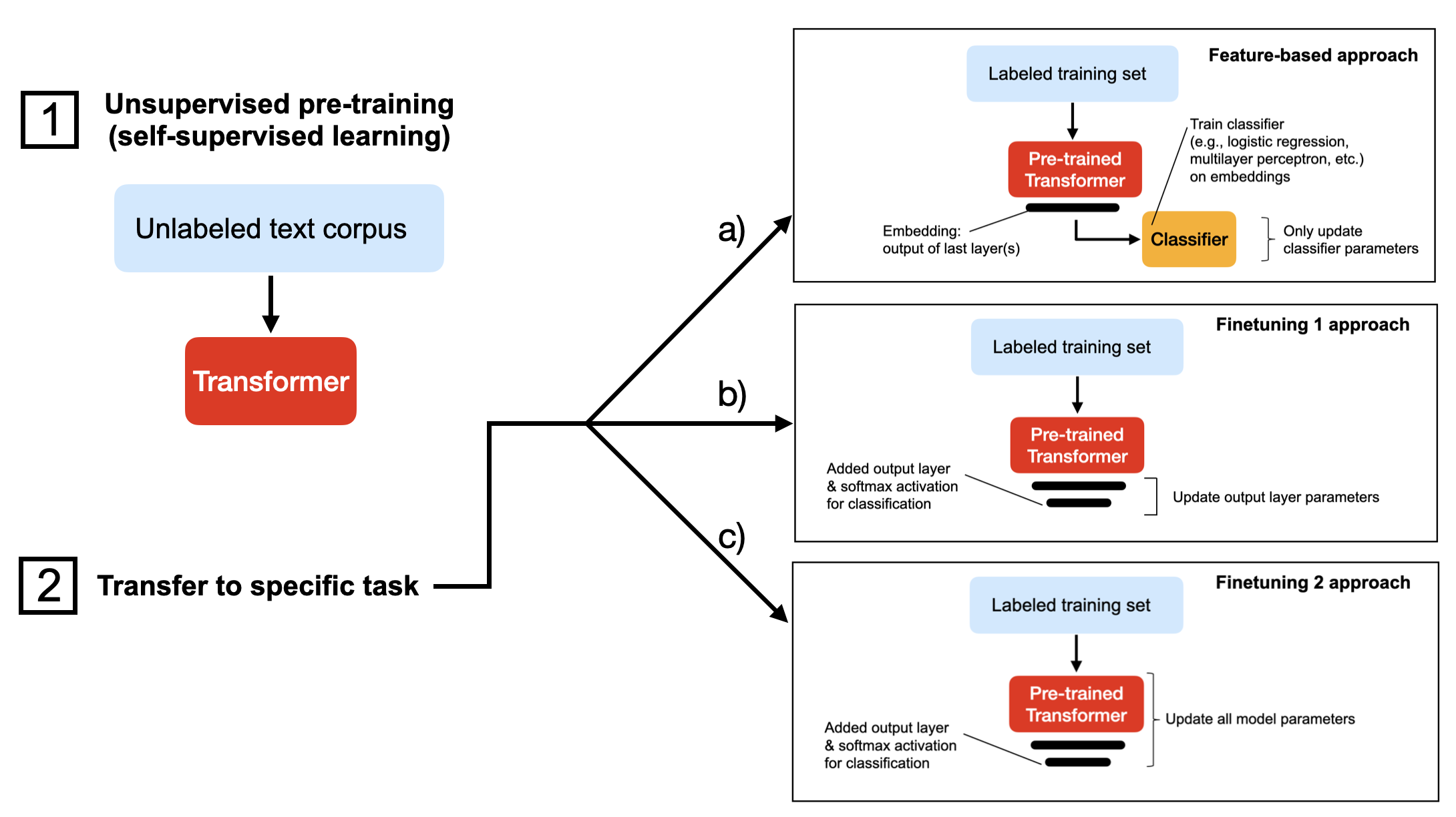

Transfer learning with Transformers trainer and pipeline for NLP

How to Fine-tune HuggingFace BERT model for Text Classification

PyTorch on Google Cloud: How To train PyTorch models on AI

K_1.1. Tokenized Inputs Outputs - Transformer, T5_EN - Deep

Transformers from scratch

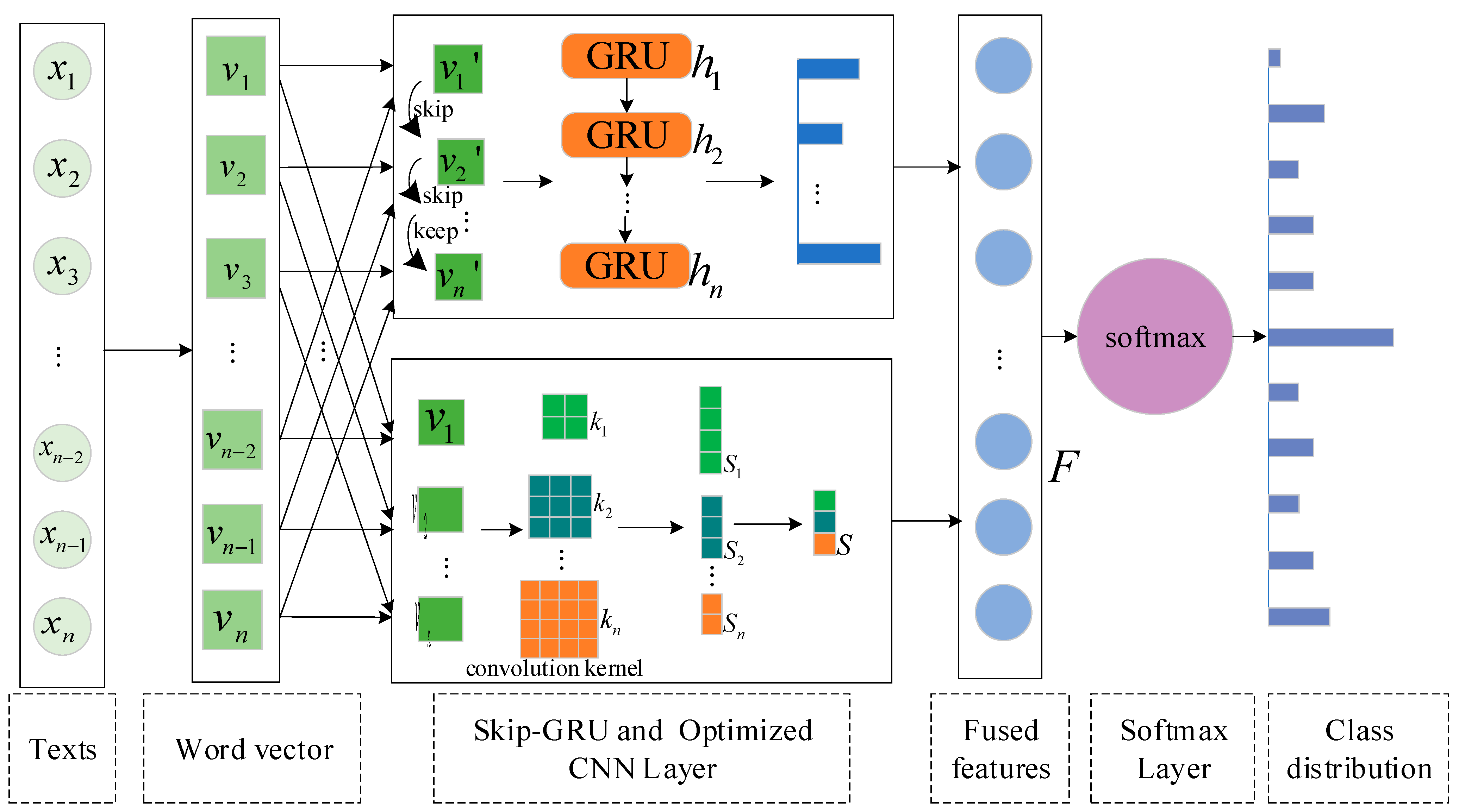

Applied Sciences, Free Full-Text

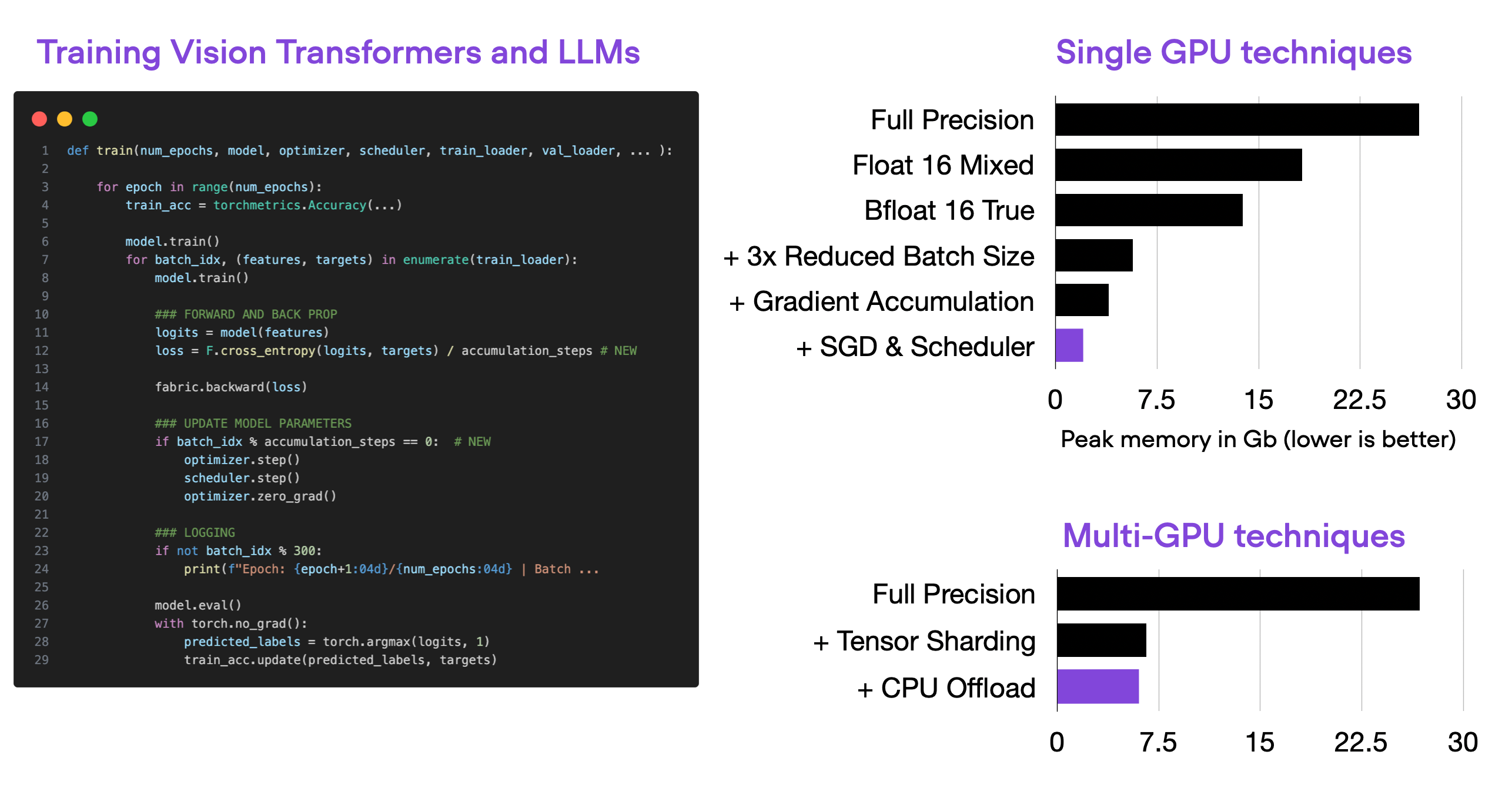

Some Techniques To Make Your PyTorch Models Train (Much) Faster

Optimizing Memory Usage for Training LLMs and Vision Transformers

PyTorch on Google Cloud: How To train and tune PyTorch models on
