RL Weekly 36: AlphaZero with a Learned Model achieves SotA in Atari

By a mysterious writer

Last updated 08 November 2024

In this issue, we look at MuZero, DeepMind’s new algorithm that learns a model, matches AlphaZero’s performance in chess, shogi, and Go, and achieves state-of-the-art performance on Atari. We also look at Safety Gym, OpenAI’s new environment suite for safe RL.
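To make "learns a model" a little more concrete, here is a minimal sketch of the three learned functions that the MuZero paper describes (representation, dynamics, prediction), unrolled over a short action sequence. This goes beyond the blurb above: the dimensions, the `linear` helper, and the fixed random weights are all hypothetical stand-ins for trained networks, and the tree search and training loop are omitted entirely.

```python
import math
import random

random.seed(0)

# Hypothetical sizes, chosen only for illustration.
OBS_DIM, HIDDEN_DIM, NUM_ACTIONS = 4, 8, 3


def linear(in_dim, out_dim):
    """Return a fixed random linear map standing in for a trained network."""
    w = [[random.gauss(0, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    return lambda x: [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


_repr_net = linear(OBS_DIM, HIDDEN_DIM)
_dyn_net = linear(HIDDEN_DIM + NUM_ACTIONS, HIDDEN_DIM)
_reward_net = linear(HIDDEN_DIM + NUM_ACTIONS, 1)
_policy_net = linear(HIDDEN_DIM, NUM_ACTIONS)
_value_net = linear(HIDDEN_DIM, 1)


def one_hot(action):
    return [1.0 if i == action else 0.0 for i in range(NUM_ACTIONS)]


def representation(observation):
    """Encode a real observation into a hidden state."""
    return [math.tanh(v) for v in _repr_net(observation)]


def dynamics(state, action):
    """Predict the next hidden state and reward, without the real environment."""
    x = state + one_hot(action)
    next_state = [math.tanh(v) for v in _dyn_net(x)]
    reward = _reward_net(x)[0]
    return next_state, reward


def prediction(state):
    """Predict a policy (softmax over actions) and a value from a hidden state."""
    logits = _policy_net(state)
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    policy = [e / total for e in exps]
    value = _value_net(state)[0]
    return policy, value


def unroll(observation, actions):
    """Roll the learned model forward along a sequence of actions."""
    state = representation(observation)
    outputs = []
    for action in actions:
        policy, value = prediction(state)
        state, reward = dynamics(state, action)
        outputs.append((policy, value, reward))
    return outputs
```

Calling `unroll([0.1, -0.2, 0.3, 0.0], [0, 2, 1])` returns one `(policy, value, reward)` tuple per action. The point of the design is that only the first step touches a real observation; every later step is planned in the learned hidden space.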

Johan Gras (@gras_johan) / X

RL Weekly 9: Sample-efficient Near-SOTA Model-based RL, Neural MMO, and Bottlenecks in Deep Q-Learning

UC Berkeley Reward-Free RL Beats SOTA Reward-Based RL

Scheduling UAV Swarm with Attention-based Graph Reinforcement Learning for Ground-to-air Heterogeneous Data Communication

State of AI Report 2023 - Air Street Capital

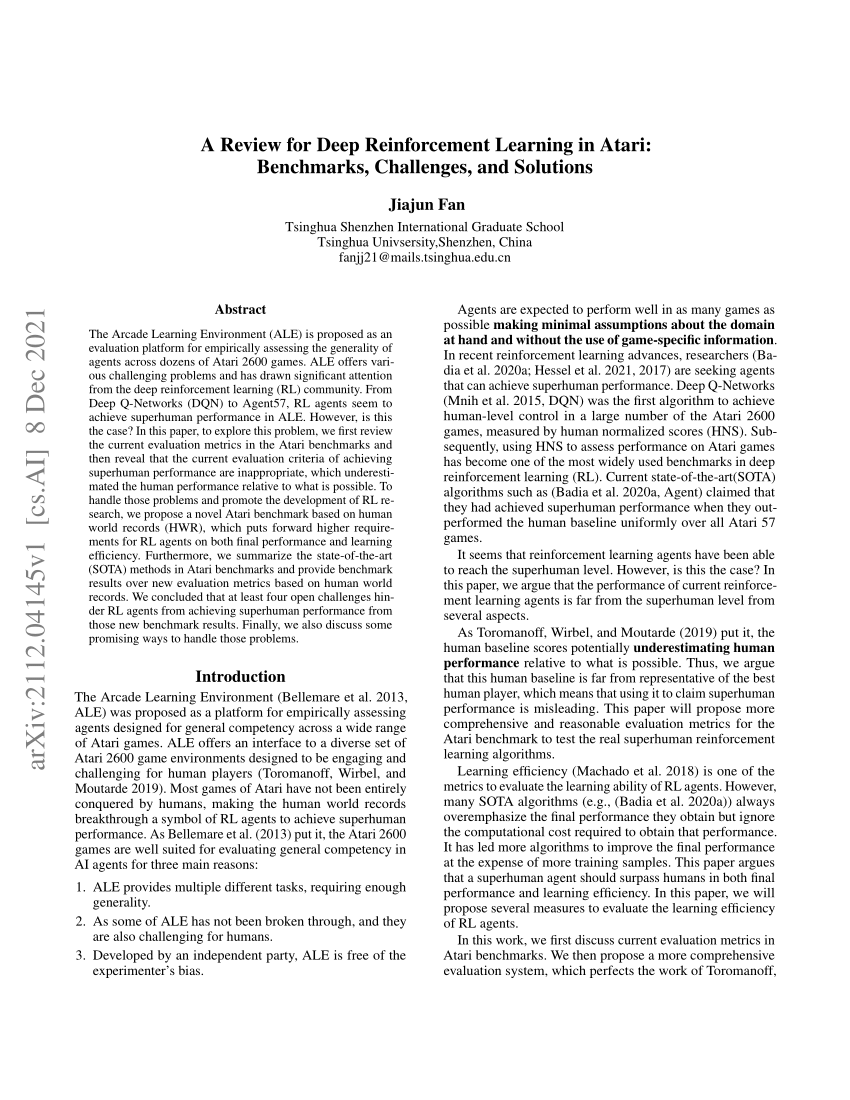

(PDF) A Review for Deep Reinforcement Learning in Atari: Benchmarks, Challenges, and Solutions

(PDF) On Reinforcement Learning for the Game of 2048

Applied Sciences, Free Full-Text

Kristian Kersting

[2008.06495] Joint Policy Search for Multi-agent Collaboration with Imperfect Information

ICLR 2022

Recomendado para você

-

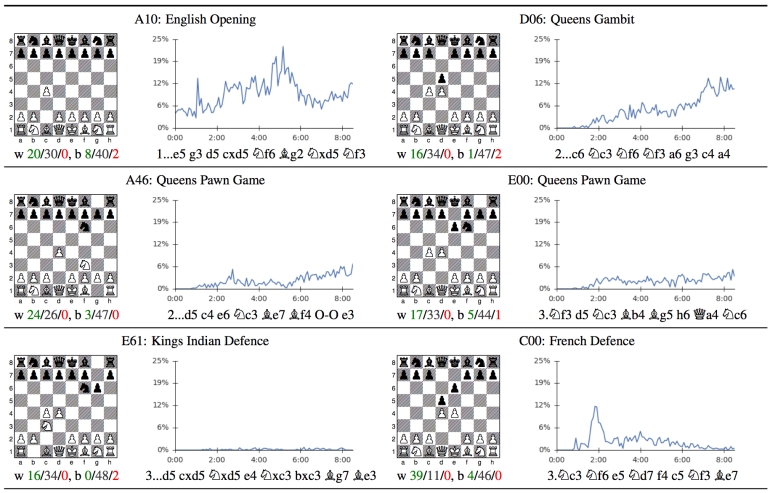

How Does AlphaZero Play Chess?08 novembro 2024

How Does AlphaZero Play Chess?08 novembro 2024 -

Checkmate: how we mastered the AlphaZero cover, Science08 novembro 2024

Checkmate: how we mastered the AlphaZero cover, Science08 novembro 2024 -

Comparison of network architecture of AlphaZero and NoGoZero+ (508 novembro 2024

-

AlphaZero learns to solve quantum problems - ΑΙhub08 novembro 2024

AlphaZero learns to solve quantum problems - ΑΙhub08 novembro 2024 -

AlphaZero learns human concepts08 novembro 2024

AlphaZero learns human concepts08 novembro 2024 -

Multiplayer AlphaZero – arXiv Vanity08 novembro 2024

Multiplayer AlphaZero – arXiv Vanity08 novembro 2024 -

Deepmind's AlphaZero Plays Chess08 novembro 2024

Deepmind's AlphaZero Plays Chess08 novembro 2024 -

AlphaZero - Notes on AI08 novembro 2024

AlphaZero - Notes on AI08 novembro 2024 -

AlphaZero just wants to play08 novembro 2024

AlphaZero just wants to play08 novembro 2024 -

Chessmasters praise AlphaZero AI games and says it has an aggressive playing style08 novembro 2024

Chessmasters praise AlphaZero AI games and says it has an aggressive playing style08 novembro 2024

você pode gostar

-

Wise Christmas Mystical Tree Poster for Sale by TheBigSadShop08 novembro 2024

Wise Christmas Mystical Tree Poster for Sale by TheBigSadShop08 novembro 2024 -

Emissão Postal Especial Xadrez08 novembro 2024

Emissão Postal Especial Xadrez08 novembro 2024 -

Roblox Icon Blank Template - Imgflip08 novembro 2024

Roblox Icon Blank Template - Imgflip08 novembro 2024 -

mast game - Classic Game by saurabhxxx - Play Free, Make a Game Like This08 novembro 2024

mast game - Classic Game by saurabhxxx - Play Free, Make a Game Like This08 novembro 2024 -

Saia Rosa Cute Vestido Pequeno Ilustração PNG , Cartoon Saia, Roupas Para Crianças, Produtos De Bebê Imagem PNG e Vetor Para Download Gratuito08 novembro 2024

Saia Rosa Cute Vestido Pequeno Ilustração PNG , Cartoon Saia, Roupas Para Crianças, Produtos De Bebê Imagem PNG e Vetor Para Download Gratuito08 novembro 2024 -

What is your favorite Jojo pose?08 novembro 2024

What is your favorite Jojo pose?08 novembro 2024 -

Interview - Ready at Dawn's Ru Weerasuriya on God of War: Ghost of08 novembro 2024

Interview - Ready at Dawn's Ru Weerasuriya on God of War: Ghost of08 novembro 2024 -

All of Us Are Dead Série - onde assistir grátis08 novembro 2024

All of Us Are Dead Série - onde assistir grátis08 novembro 2024 -

486 - Regigigas by SpinoOne on DeviantArt08 novembro 2024

486 - Regigigas by SpinoOne on DeviantArt08 novembro 2024 -

Bruno diferente mais um dia da sua caminhada para nos animar - iFunny Brazil08 novembro 2024

Bruno diferente mais um dia da sua caminhada para nos animar - iFunny Brazil08 novembro 2024